The simple background model that I have implemented doesn't seem to be very useful. However, it's a first step toward the considerable amount of work needed to get the background model right.

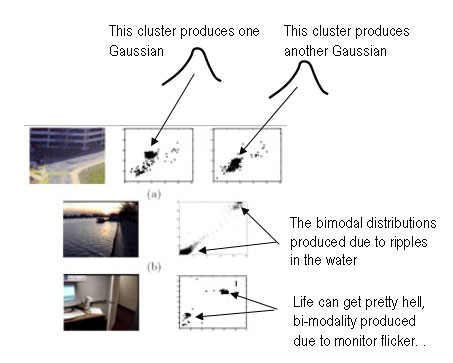

My background model needs to handle scenes that start out with moving tree leaves and, if time permits, it should also be able to learn and store backgrounds that start off with some moving traffic. The background also needs to update itself recursively. For example, a person could come into the scene and leave an object behind, in which case the object becomes part of the background. Or there could be illumination changes in the scene.

I came across a paper by Haritaoglu et al., titled "W4: Real-Time Surveillance of People and Their Activities" (published in IEEE Transactions on Pattern Analysis and Machine Intelligence, August 2000), where they describe a background model that can learn a background even if there are moving objects in the scene (e.g. traffic or leaves). It can also adapt itself recursively using so-called "support maps", hence allowing for objects that become part of the background later on.

W4, however, fails when there are sudden changes in illumination, in which case it "thinks" most of the background is foreground. W4 tries to recover from such catastrophes by using a percentage indicator: if, say, more than 80% of the background is classified as foreground, it rejects the background model and builds a new one immediately.
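The percentage indicator can be sketched in a few lines. This is only a minimal illustration, not code from the paper: the function names, the flat `std::vector` mask layout (non-zero means foreground) and the default of 0.8 are all assumptions made for the example.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Fraction of pixels marked as foreground in a binary mask.
// Assumption: the mask is a flat array, non-zero = foreground.
double foregroundFraction(const std::vector<std::uint8_t>& mask) {
    if (mask.empty()) return 0.0;
    std::size_t count = 0;
    for (std::uint8_t m : mask) {
        if (m != 0) ++count;
    }
    return static_cast<double>(count) / static_cast<double>(mask.size());
}

// True when so much of the frame is flagged as foreground that the
// background model is probably invalid (e.g. after a sudden lighting
// change) and should be relearned. The 0.8 default mirrors the
// "more than 80%" example above; it is a tunable guess.
bool shouldRebuildBackground(const std::vector<std::uint8_t>& mask,
                             double maxFraction = 0.8) {
    return foregroundFraction(mask) > maxFraction;
}
```

The check would run once per frame on the foreground mask, and a `true` result would trigger a fresh training phase instead of the normal recursive update.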

To begin implementing W4 in my system, I needed a median filter to distinguish between moving and stationary pixels. I am able to use the median filter from Intel's cv library, but I still need to investigate how it can be used to distinguish moving from non-moving pixels.

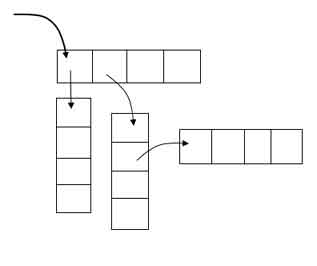

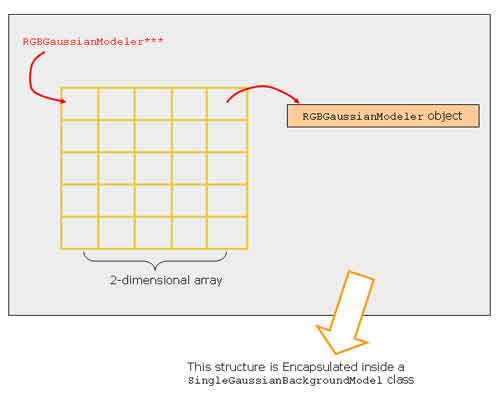

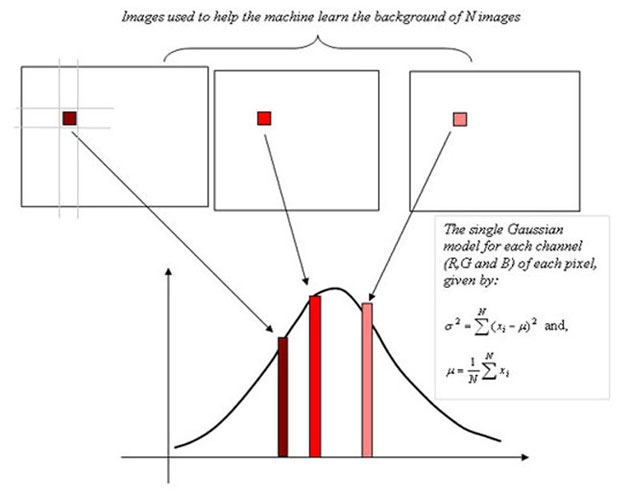

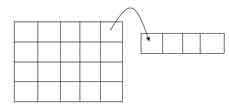

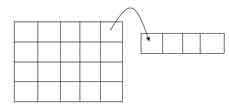
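One possible way the median could separate moving from stationary pixels is to take it over time rather than over space: for each pixel, compute the median of its values across a short run of frames, then call the pixel stationary in a given frame if its value stays close to that median. The sketch below assumes grayscale frames stored as flat vectors of equal size and a fixed, hand-picked threshold. Both are simplifications for illustration, and the helper names are hypothetical, not part of any library.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Per-pixel temporal median over a set of grayscale frames.
// Assumption: every frame is a flat array with the same number of pixels.
// For an even number of frames the upper of the two middle values is used.
std::vector<std::uint8_t> medianImage(
        const std::vector<std::vector<std::uint8_t>>& frames) {
    if (frames.empty()) return {};
    const std::size_t pixels = frames.front().size();
    std::vector<std::uint8_t> result(pixels);
    std::vector<std::uint8_t> samples(frames.size());
    for (std::size_t p = 0; p < pixels; ++p) {
        // Collect the history of this one pixel across all frames.
        for (std::size_t f = 0; f < frames.size(); ++f) {
            samples[f] = frames[f][p];
        }
        auto mid = samples.begin() + samples.size() / 2;
        std::nth_element(samples.begin(), mid, samples.end());
        result[p] = *mid;
    }
    return result;
}

// A pixel counts as stationary when it is within `threshold` grey levels
// of its temporal median. The threshold is an illustrative constant.
bool isStationary(std::uint8_t value, std::uint8_t median, int threshold) {
    return std::abs(static_cast<int>(value) - static_cast<int>(median))
           <= threshold;
}
```

A brief outlier, such as a leaf or a car passing through a pixel for one or two frames, barely shifts the median, which is why a scene can be learned even while something is moving in it.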

The next step is to store a vector (5 x 1) for each pixel that is modelled as background. For this I required a data structure that looked something like this:

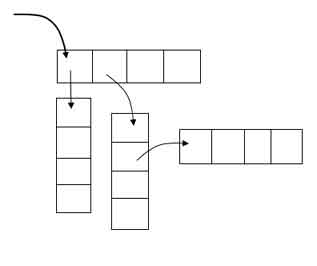

However, when you try to implement such a structure in C++, it ends up looking something like this: