My model had a fundamental problem. It used only three Gaussians for the entire background model, one for each color channel (R, G and B). That was the only basis for its ability to detect differing colors.

However, this model had to be changed. A background model should have three Gaussians for every pixel of the background the machine is to learn, one per color channel.

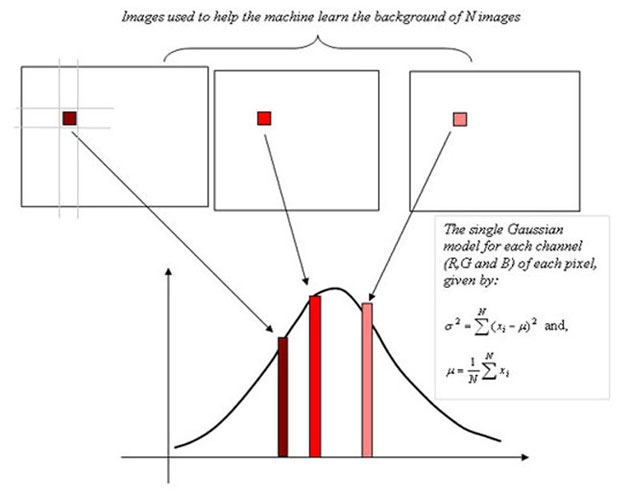

Here is an illustration of how this works: the system computes a Gaussian for each channel of each pixel of the background model.
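A minimal sketch of this learning step in plain Python follows. It assumes the background is learnt from a set of background-only frames, and that each channel's Gaussian is fitted as the mean and population standard deviation of that channel's values across those frames. The image layout (lists of rows of `(R, G, B)` tuples) and the name `learn_background` are illustrative assumptions, not taken from the original project.

```python
from statistics import mean, pstdev

def learn_background(frames):
    """Fit one Gaussian (mean, std) per color channel of every pixel.

    Assumed layout: frames is a list of N background-only images; each image
    is a list of rows, each row a list of (R, G, B) tuples, all the same size.
    Returns an H x W model where each pixel holds three (mean, std) pairs,
    i.e. three Gaussians per pixel, one per channel.
    """
    height, width = len(frames[0]), len(frames[0][0])
    model = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = []
            for c in range(3):  # 0 = R, 1 = G, 2 = B
                # All values this channel took at this pixel during learning.
                samples = [frame[y][x][c] for frame in frames]
                pixel.append((mean(samples), pstdev(samples)))
            row.append(pixel)
        model.append(row)
    return model

# Hypothetical data: two 1x2 background frames.
frames = [
    [[(100, 50, 20), (0, 0, 0)]],
    [[(110, 50, 30), (10, 20, 30)]],
]
model = learn_background(frames)
print(model[0][0])  # three (mean, std) pairs for the pixel at (0, 0)
```

For an H×W image this yields 3·H·W Gaussians instead of the three the earlier model used for the whole background.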

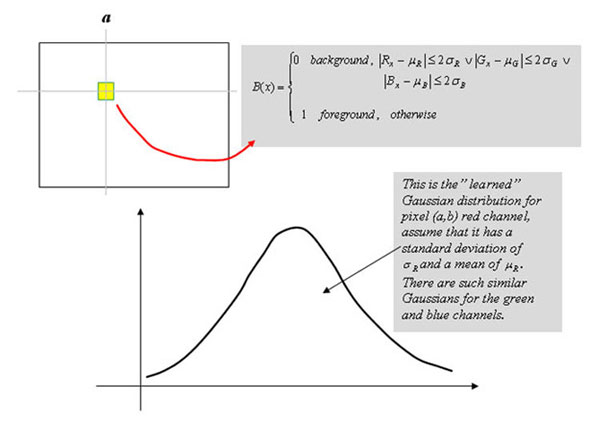

After the background is learnt (i.e. the single Gaussians are calculated), each image to which background subtraction is applied undergoes the following tests, as illustrated below:
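The sketch below does not reproduce those specific tests. As an assumption, it shows a common form of per-pixel test: a pixel is flagged as foreground when any of its channels lies more than `k` standard deviations from that channel's learnt mean. The function names, the threshold `k = 2.5`, and the `min_std` floor are hypothetical choices for illustration, not necessarily what this project uses.

```python
def is_foreground(pixel, gaussians, k=2.5, min_std=1.0):
    """Return True if any channel of `pixel` falls outside its Gaussian.

    pixel: (R, G, B) values of the pixel in the new image.
    gaussians: the three (mean, std) pairs learnt for that pixel position.
    k: hypothetical threshold, in standard deviations.
    min_std: hypothetical floor so a channel with std 0 does not flag
             every tiny change as foreground.
    """
    for value, (mu, sigma) in zip(pixel, gaussians):
        if abs(value - mu) > k * max(sigma, min_std):
            return True
    return False

def subtract_background(image, model, k=2.5):
    """Build a binary mask: 1 for foreground pixels, 0 for background."""
    return [
        [1 if is_foreground(px, model[y][x], k) else 0 for x, px in enumerate(row)]
        for y, row in enumerate(image)
    ]

# Hypothetical model for a 1x2 image and a new image to test against it.
model = [[[(100, 5.0), (50, 5.0), (20, 5.0)], [(100, 5.0), (50, 5.0), (20, 5.0)]]]
image = [[(102, 49, 21), (200, 50, 20)]]
mask = subtract_background(image, model)
print(mask)
```

The `min_std` floor matters because a channel that never changed during learning has a standard deviation of 0, and without a floor any deviation at all would count as foreground.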
